Epidemiology and Reporting Characteristics of Systematic Reviews of Biomedical Research: A Cross-Sectional Study

In a cross-sectional manuscript analysis, David Moher and colleagues score the prevalence, quality of conduct and completeness of reporting among systematic reviews published across medical disciplines in 2014.

Published in the journal: PLoS Med 13(5): e32767. doi:10.1371/journal.pmed.1002028

Category: Research Article

doi: https://doi.org/10.1371/journal.pmed.1002028

Introduction

Biomedical and public health research is conducted on a massive scale; for instance, more than 750,000 publications were indexed in MEDLINE in 2014 [1]. Systematic reviews (SRs) of research studies can help users make sense of this vast literature, by synthesizing the results of studies that address a particular question, in a rigorous and replicable way. By examining the accumulated body of evidence rather than the results of single studies, SRs can provide more reliable results for a range of health care enquiries (e.g., what are the benefits and harms of therapeutic interventions, what is the accuracy of diagnostic tests) [2,3]. SRs can also identify gaps in knowledge and inform future research agendas. However, a SR may be of limited use to decision makers if the methods used to conduct the SR are flawed, and reporting of the SR is incomplete [4,5].

Moher et al. previously investigated the prevalence of SRs in the biomedical literature and their quality of reporting [6]. A search of MEDLINE in November 2004 identified 300 SRs indexed in that month, which corresponded to an annual publication prevalence of 2,500 SRs. The majority of SRs (71%) focused on a therapeutic question (as opposed to a diagnosis, prognosis, or epidemiological question), and 20% were Cochrane SRs. The reporting quality varied, with only 66% reporting the years of their search, 69% assessing study risk of bias/quality, 50% using the term “systematic review” or “meta-analysis” in the title or abstract, 23% formally assessing evidence for publication bias, and 60% reporting the funding source of the SR.

The publication landscape for SRs has changed considerably in the subsequent decade. Major events include the publication of the PRISMA reporting guidelines for SRs [7,8] and SR abstracts [9] and their subsequent endorsement in top journals, the launch of the Institute of Medicine’s standards for SRs of comparative effectiveness research [10], methodological developments such as a new tool to assess the risk of bias in randomized trials included in SRs [11], and the proliferation of open-access journals to disseminate health and medical research findings, in particular Systematic Reviews, a journal specifically for completed SRs, their protocols, and associated research [12]. Other studies have examined in more recent samples either the prevalence of SRs (e.g., [13]) or reporting characteristics of SRs in specific fields (e.g., physical therapy [14], complementary and alternative medicine [15], and radiology [16]). However, to our knowledge, since the 2004 sample, there has been no cross-sectional study of the characteristics of SRs across different specialties. Therefore, we considered it timely to explore the prevalence and focus of a more recent cross-section of SRs, and to assess whether reporting quality has improved over time.

The primary objective of this study was to investigate the epidemiological and reporting characteristics of SRs indexed in MEDLINE during the month of February 2014. Secondary objectives were to explore (1) how the characteristics of different types of reviews (e.g., therapeutic, epidemiology, diagnosis) vary; (2) whether the reporting quality of therapeutic SRs is associated with whether a SR was a Cochrane review and with self-reported use of the PRISMA Statement; and (3) how the current sample of SRs differs from the sample of SRs in 2004.

Methods

Study Protocol

We prespecified our methods in a study protocol (S1 Protocol).

Eligibility Criteria

We included articles that we considered to meet the PRISMA-P definition of a SR [17,18], that is, articles that explicitly stated methods to identify studies (i.e., a search strategy), explicitly stated methods of study selection (e.g., eligibility criteria and selection process), and explicitly described methods of synthesis (or other type of summary). We did not exclude SRs based on the type of methods they used (e.g., an assessment of the validity of findings of included studies could be reported using a structured tool or informally in the limitations section of the Discussion). Also, we did not exclude SRs based on the level of detail they reported about their methods (e.g., authors could present a line-by-line Boolean search strategy or just list the key words they used in the search). Further, we included articles regardless of the SR question (e.g., therapeutic, diagnostic, etiology) and the types of studies included (e.g., quantitative or qualitative). We included only published SRs that were written in English, to be consistent with the previous study [6].

We used the PRISMA-P definition of SRs because it is in accordance with the definition reported in the PRISMA Statement [7] and with that used by Cochrane [19], by the Agency for Healthcare Research and Quality’s Evidence-based Practice Centers Program [20], and in the 2011 guidance from the Institute of Medicine [10]. Further, the SR definition used by Moher et al. for the 2004 sample (“the authors’ stated objective was to summarize evidence from multiple studies and the article described explicit methods, regardless of the details provided”) [6] ignores the evolution of SR terminology over time.

We excluded the following types of articles: narrative/non-systematic literature reviews; non-systematic literature reviews with meta-analysis or meta-synthesis, where the authors conducted a meta-analysis or meta-synthesis of studies but did not use SR methods to identify and select the studies; articles described by the authors as “rapid reviews” or literature reviews produced using accelerated or abbreviated SR methods; overviews of reviews (or umbrella reviews); scoping reviews; methodology studies that included a systematic search for studies to evaluate some aspect of conduct/reporting (e.g., assessments of the extent to which all trials published in 2012 adhered to the CONSORT Statement); and protocols or summaries of SRs.

Searching

We searched for SRs indexed throughout one calendar month. We selected February 2014, as it was the month closest to when the protocol for this study was drafted. We searched Ovid MEDLINE In-Process & Other Non-Indexed Citations and Ovid MEDLINE 1946 to Present using the search strategy reported by Moher et al. [6]: (1) 201402$.ed; (2) limit 1 to English; (3) 2 and (cochrane database of systematic reviews.jn. or search.tw. or metaanalysis.pt. or medline.tw. or systematic review.tw. or ((metaanalysis.mp,pt. or review.pt. or search$.tw.) and methods.ab.)).

This search strategy retrieved records that were indexed, rather than published, in February 2014. An information specialist (M. S.) ran a modified search strategy to retrieve records in each of the 3 months prior to and following February 2014, which showed that the number of records entered into MEDLINE during February 2014 was representative of these other months.

Screening

Screening was undertaken using online review software, DistillerSR. A form for screening of titles and abstracts (see S1 Forms) was used after being piloted on three records. Subsequently, three reviewers (M. J. P., L. S., and L. L.) screened all titles and abstracts using the method of liberal acceleration, whereby two reviewers needed to independently exclude a record for it to be excluded, while only one reviewer needed to include a record for it to be included. We retrieved the full text article for any citations meeting our eligibility criteria or for which eligibility remained unclear. A form for screening full text articles (see S1 Forms) was also piloted on three articles. Subsequently, two authors (of M. J. P., L. S., L. L., R. S.-O., E. K. R., and J. T.) independently screened each full text article. Any discrepancies in screening of titles/abstracts and full text articles were resolved via discussion, with adjudication by a third reviewer if necessary. In both these rounds of screening, articles were considered a SR if they met the Moher et al. [6] definition of a SR. Each full text article marked as eligible for inclusion was then screened a final time by one of two authors (M. J. P. or L. S.) to confirm that the article was consistent with the PRISMA-P 2015 definition of a SR (using the screening form in S1 Forms).
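The liberal acceleration rule described above can be sketched in a few lines. This is only an illustration of the decision logic, not the DistillerSR workflow the authors used; the function name and the "pending" state are hypothetical.

```python
from typing import Iterable


def liberal_acceleration(votes: Iterable[str]) -> str:
    """Return the title/abstract screening decision for one record.

    `votes` holds each reviewer's independent call, "include" or "exclude".
    A single "include" advances the record to full-text screening, whereas
    exclusion requires two independent "exclude" votes. A record with only
    one "exclude" vote is still awaiting a second reviewer.
    """
    votes = list(votes)
    if "include" in votes:
        return "include"
    if votes.count("exclude") >= 2:
        return "exclude"
    return "pending"  # hypothetical label for records needing another vote


# One reviewer is enough to include; two are needed to exclude.
print(liberal_acceleration(["include"]))
print(liberal_acceleration(["exclude"]))
print(liberal_acceleration(["exclude", "exclude"]))
```

The asymmetry is deliberate: it makes wrongly excluding a relevant record harder than wrongly including one, since wrongly included records are caught at the full-text stage.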

Data Extraction and Verification

We performed data extraction on a random sample of 300 of the included SRs, which were selected using the random number generator in Microsoft Excel. We selected 300 SRs to match the number used in the 2004 sample. Sampling was stratified so that the proportion of Cochrane reviews in the selected sample equaled that in the total sample. Data were collected in DistillerSR using a standardized data extraction form including 88 items (see S1 Forms). The items were based on data collected in two previous studies [6,15] and included additional items to capture some issues not previously examined. All data extractors piloted the form on three SRs to ensure consistency in interpretation of data items. Subsequently, data from each SR were extracted by one of five reviewers (M. J. P., L. S., F. C.-L., R. S.-O., or E. K. R.). Data were extracted from both the article and any web-based appendices available.

At the end of data extraction, a 10% random sample of SRs (n = 30 SRs) was extracted independently in duplicate. Comparison of the data extracted revealed 42 items where a discrepancy existed between two reviewers on at least one occasion (items marked in S1 Forms). All discrepancies were resolved via discussion. To minimize errors in the remaining sample of SRs, one author (M. J. P.) verified the data for these 42 items in all SRs. Also, one author (M. J. P.) reviewed the free text responses of all items with an “Other (please specify)” option. Responses were modified if it was judged that one of the forced-choice options was a more appropriate selection.

Data Analysis

All analyses were performed using Stata version 13 software [21]. Data were summarized as frequency and percentage for categorical items and median and interquartile range (IQR) for continuous items. We analyzed characteristics of all SRs and SRs categorized as Cochrane therapeutic (treatment/prevention), non-Cochrane therapeutic, epidemiology (e.g., prevalence, association between exposure and outcome), diagnosis/prognosis (e.g., diagnostic test accuracy, clinical prediction rules), or other (education, psychometric properties of scales, cost of illness). We anticipated that these different types of SRs would differ in the types of studies included (e.g., therapeutic SRs would more likely include randomized trials than epidemiology SRs). However, we considered nearly all of the other epidemiological and reporting characteristics as equally applicable to all types of SRs (i.e., all SRs, regardless of focus, should describe the methods of study identification, selection, appraisal, and synthesis). We have indicated in tables when a characteristic was not applicable (e.g., reporting of harms of interventions was only considered in therapeutic SRs). We also present, for all characteristics measured in the Moher et al. [6] sample, the percentage of SRs with each characteristic in 2004 compared with in 2014.

We explored whether the reporting of 26 characteristics of therapeutic SRs was associated with a SR being a Cochrane review and with self-reported use of the PRISMA Statement to guide conduct/reporting. The 26 characteristics were selected because they focused on whether a characteristic was reported or not (e.g., “Were eligible study designs stated?”) rather than on the detail provided (e.g., “Which study designs were eligible?”). We also explored whether the reporting of 15 characteristics of all SRs differed between the 2004 and 2014 samples (only 15 of the 26 characteristics were measured in both samples). Associations were quantified using the risk ratio, with 95% confidence intervals. The risk ratio was calculated because it is generally more interpretable than the odds ratio [22]. For the analysis of the association of reporting of characteristics with PRISMA use, we included only therapeutic SRs because PRISMA was designed primarily for this type of SR, and we excluded Cochrane SRs because they are supported by software that promotes good reporting.
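The risk ratio calculation can be illustrated with a short sketch. It assumes the standard log-scale (Wald) confidence interval; the article does not state which interval method was used in Stata, so that choice is an assumption. The counts are those reported in the Results for searching of trial registries (28/45 Cochrane versus 24/119 non-Cochrane therapeutic SRs).

```python
import math


def risk_ratio(a: int, n1: int, b: int, n2: int, z: float = 1.96):
    """Risk ratio of group 1 versus group 2 with a log-scale Wald interval.

    a / n1: SRs reporting the characteristic / total SRs in group 1
    b / n2: the same counts for group 2
    z:      normal quantile (1.96 gives an approximate 95% interval)
    """
    rr = (a / n1) / (b / n2)
    # Standard error of log(RR) for two independent binomial proportions.
    se_log_rr = math.sqrt(1 / a - 1 / n1 + 1 / b - 1 / n2)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Trial registry searching: Cochrane versus non-Cochrane therapeutic SRs.
rr, lower, upper = risk_ratio(28, 45, 24, 119)
print(f"RR = {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

A risk ratio above 1 with an interval that excludes 1 indicates that the characteristic was reported more often in the first group.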

The analyses described above were all prespecified before analyzing any data. The association between reporting and self-reported use of the PRISMA Statement was not included in our study protocol; it was planned following the completion of data extraction and prior to analysis. We planned to explore whether reporting characteristics of non-Cochrane SRs that were registered differed from those of non-Cochrane SRs that were unregistered, and whether reporting differed in SRs with a protocol compared to SRs without a protocol. However, there were too few SRs with a registration record (n = 12) or a SR protocol (n = 5) to permit reliable comparisons.

We performed a post hoc sensitivity analysis to see if the estimated prevalence of SRs was influenced by including articles that met the definition of a SR used by Moher et al. [6] but not the more explicit PRISMA-P 2015 definition.

Results

Search Results

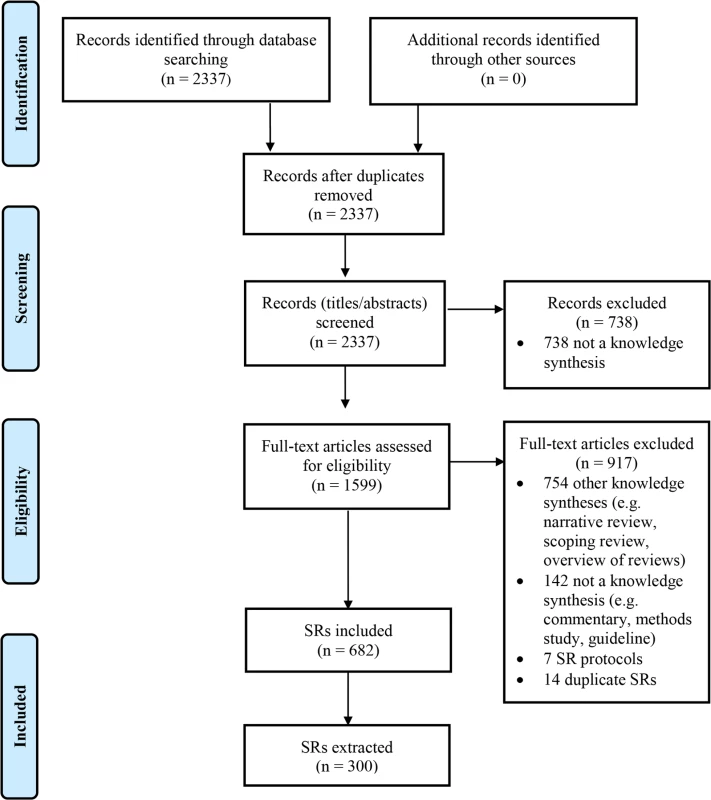

There were 2,337 records identified by the search (Fig 1). Screening of titles and abstracts led to the exclusion of 738 records. Of the 1,599 full text articles retrieved, 917 were excluded; most articles were not SRs but rather another type of knowledge synthesis (e.g., narrative review, scoping review, overview of reviews).

Fig. 1. Flow diagram of identification, screening, and inclusion of SRs.

Prevalence of SRs

We identified 682 SRs that were indexed in MEDLINE in February 2014. This figure suggests an annual publication rate of more than 8,000 SRs, which is equivalent to 22 SRs per day and is a 3-fold increase over what was observed in 2004 [6] (see calculations in S1 Results). One hundred (15%) of the SRs were Cochrane reviews. We identified 87 articles that were not counted in the final sample of 682 SRs but that would have met the less explicit definition of a SR used by Moher et al. [6] for the 2004 sample. In a sensitivity analysis, adding these to the final sample raised the SR prevalence to 769 SRs indexed in the month of February 2014, which is equivalent to more than 9,000 SRs per year and 25 SRs per day being indexed in MEDLINE.
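The arithmetic behind these prevalence figures can be reproduced approximately as follows. This is a simple extrapolation that assumes February 2014 is representative of every month and that a year has 365 days; the authors' own calculations are in S1 Results and may differ in detail.

```python
def annualize(monthly_count: int, months: int = 12, days: int = 365):
    """Extrapolate one month's count of indexed SRs to a year and a day."""
    per_year = monthly_count * months
    per_day = per_year / days
    return per_year, per_day


# Main analysis: 682 SRs met the PRISMA-P definition.
main_year, main_day = annualize(682)

# Sensitivity analysis: add the 87 articles meeting only the 2004 definition.
sens_year, sens_day = annualize(682 + 87)

# Fold increase relative to the 2004 estimate of 2,500 SRs per year.
fold_increase = main_year / 2500

print(f"Main: {main_year} per year, {main_day:.1f} per day")
print(f"Sensitivity: {sens_year} per year, {sens_day:.1f} per day")
print(f"Increase over 2004: {fold_increase:.1f}-fold")
```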

Epidemiological Characteristics of SRs

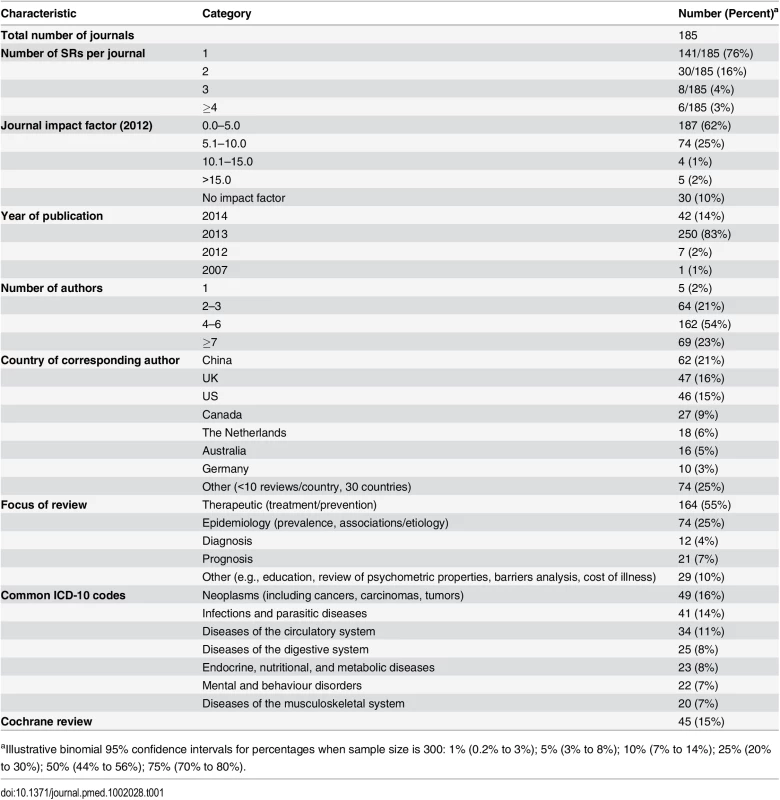

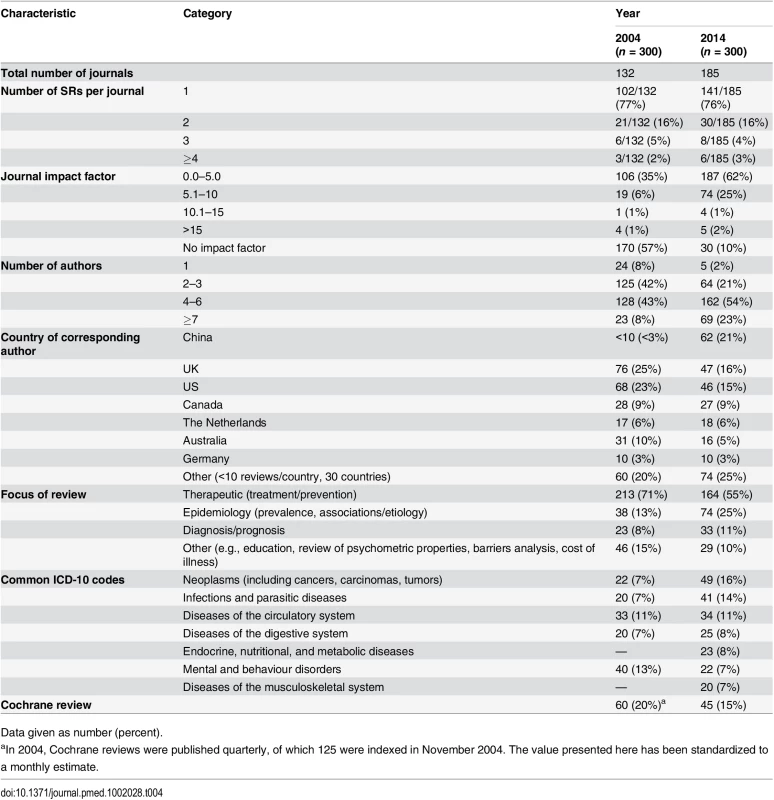

Data were collected on the random sample of 45 Cochrane and 255 non-Cochrane SRs (Table 1). The 300 SRs were published in 185 journals, most of which published only one SR during the month (141/185 [76%]) and had an impact factor less than 5.0 (187/300 [62%]). Only 5/300 (2%) SRs were published in journals with a high impact factor (i.e., Thomson ISI Journal Impact Factor 2012 > 15.0). Most of the SRs (250/300 [83%]) were published in the latter half of 2013. The median number of authors was five (IQR 4–6), and only 5/300 (2%) SRs were conducted by one author. The corresponding authors were based most commonly in China, the UK, or the US; corresponding authors from these three countries were responsible for 155/300 (52%) of the examined SRs. Just over half of the SRs (164/300 [55%]) were classified as therapeutic, 74/300 (25%) as epidemiology, 33/300 (11%) as diagnosis/prognosis, and 29/300 (10%) as other. All Cochrane SRs focused on a therapeutic question. There was wide diversity in the clinical conditions investigated; 20 ICD-10 codes were recorded across the SRs, with neoplasms, infections and parasitic diseases, and diseases of the circulatory system the most common (each investigated in >10% of SRs). All SRs were written in English.

Tab. 1. Epidemiology of 300 systematic reviews indexed in February 2014.

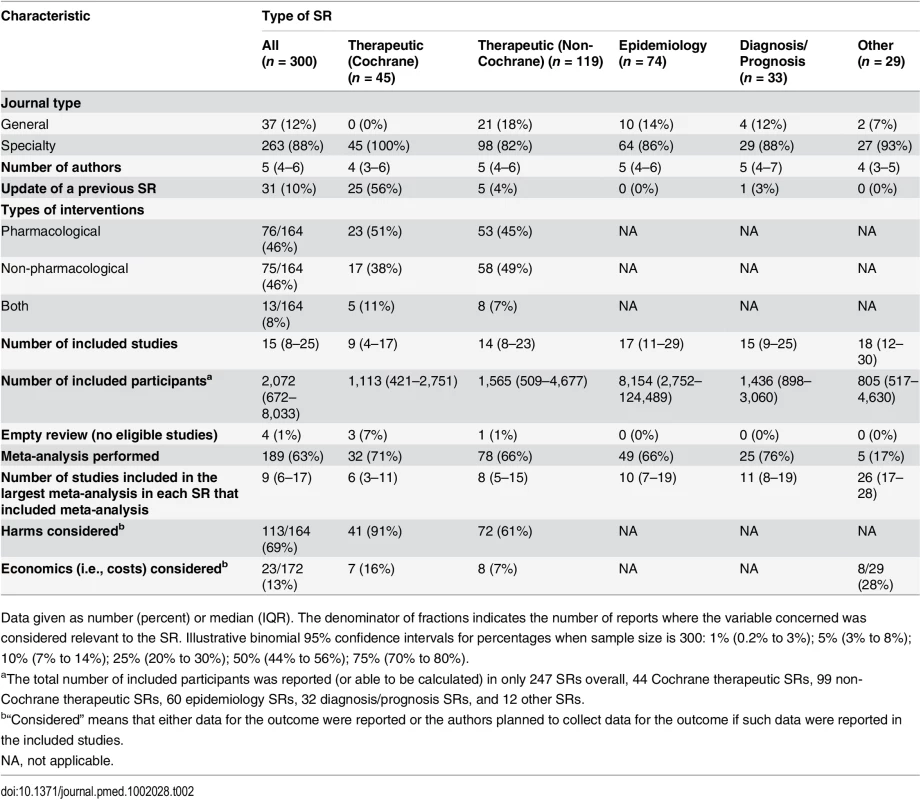

a Illustrative binomial 95% confidence intervals for percentages when sample size is 300: 1% (0.2% to 3%); 5% (3% to 8%); 10% (7% to 14%); 25% (20% to 30%); 50% (44% to 56%); 75% (70% to 80%).

Most SRs (263/300 [88%]) were published in specialty journals (Table 2). Only 31/300 (10%) were updates of a previous SR. The majority of these were Cochrane SRs (25/31 [81%]); only one diagnosis/prognosis SR and no epidemiology SRs were described as an update. Of the therapeutic SRs, 76/164 (46%) investigated a pharmacological intervention, 75/164 (46%) investigated a non-pharmacological intervention, and 13/164 (8%) investigated both types of intervention. A median of 15 studies involving 2,072 participants were included in the SRs overall, but the number of studies and participants varied according to the type of SR. Cochrane SRs included fewer studies (median 9 versus 14 in non-Cochrane therapeutic SRs), and epidemiology SRs included a larger number of participants (median 8,154 versus 1,449 in non-epidemiology SRs). Only 4/300 (1%) SRs were “empty reviews” (i.e., identified no eligible studies). Meta-analysis was performed in 189/300 (63%) SRs, with a median of 9 (IQR 6–17) studies included in the largest meta-analysis in each SR that included one or more meta-analyses. Harms of interventions were collected (or planned to be collected) in 113/164 (69%) therapeutic SRs. Few SRs (23/172 [13%]) considered costs associated with interventions or illness.
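The illustrative binomial confidence intervals quoted in the Table 1 footnote can be approximated with a short sketch. It uses the Wilson score interval, which is an assumption: the article does not name the interval method, and an exact (Clopper-Pearson) interval would give slightly different bounds for small percentages such as 1%.

```python
import math


def wilson_ci(successes: int, n: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion (approx. 95% for z=1.96)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Percentages listed in the table footnote, for a sample of 300 SRs.
for pct in (1, 5, 10, 25, 50, 75):
    k = round(300 * pct / 100)
    lower, upper = wilson_ci(k, 300)
    print(f"{pct}%: {100 * lower:.1f}% to {100 * upper:.1f}%")
```

These intervals give a sense of the precision of any percentage reported for the full sample of 300; percentages based on smaller subgroups (e.g., the 45 Cochrane SRs) are less precise.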

Tab. 2. Epidemiology of systematic reviews indexed in February 2014, subgrouped by focus of SR.

Data given as number (percent) or median (IQR). The denominator of fractions indicates the number of reports where the variable concerned was considered relevant to the SR. Illustrative binomial 95% confidence intervals for percentages when sample size is 300: 1% (0.2% to 3%); 5% (3% to 8%); 10% (7% to 14%); 25% (20% to 30%); 50% (44% to 56%); 75% (70% to 80%).

Reporting Characteristics of SRs

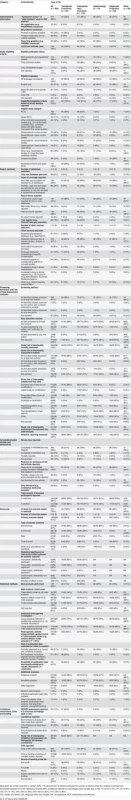

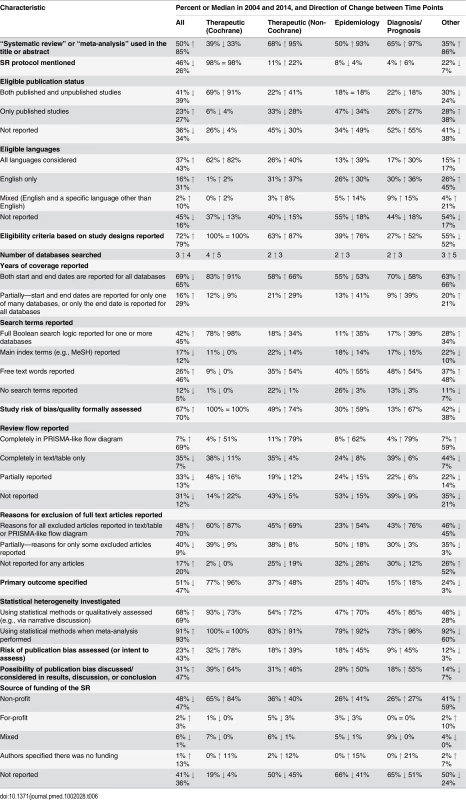

Here we summarize a subset of the characteristics of the SRs about which data were collected in this study (Table 3; data for all items are presented in S1 Results). Many SRs (254/300 [85%]) included the term “systematic review” or “meta-analysis” in the title or abstract. This percentage increased to 94% (239/255) when Cochrane SRs, which generally do not include these terms in their title, were omitted. A few SRs (12/300 [4%]) had been prospectively registered (e.g., in PROSPERO). In rather more SRs, authors mentioned working from a review protocol (77/300 [26%]), but a publicly accessible protocol was cited in only 49/300 (16%). These figures were driven almost entirely by Cochrane SRs; a publicly accessible protocol was cited in only 5/119 (4%) non-Cochrane therapeutic SRs and in no epidemiology, diagnosis/prognosis, or other SRs. Authors reported using a reporting guideline (e.g., PRISMA [7], MOOSE [23]) in 87/300 (29%) SRs. The purpose of these guidelines was frequently misinterpreted; in 45/87 (52%) of these SRs, it was stated that the reporting guideline was used to guide the conduct, not the reporting, of the SR (S1 Results). In 93/255 (36%) non-Cochrane SRs, authors reported using Cochrane methods (e.g., cited the Cochrane Handbook for Systematic Reviews of Interventions [19]).

Tab. 3. Reporting characteristics of systematic reviews indexed in February 2014 sample.

Data given as number (percent) or median (IQR). The denominator of fractions indicates the number of reports where the variable concerned was considered relevant to the SR. Illustrative binomial 95% confidence intervals for percentages when sample size is 300: 1% (0.2% to 3%); 5% (3% to 8%); 10% (7% to 14%); 25% (20% to 30%); 50% (44% to 56%); 75% (70% to 80%).

Reporting of Study Eligibility Criteria

At least one eligibility criterion was reported in the majority of SRs, but there was wide variation in the content and quality of reporting. In 116/300 (39%) SRs, authors specified that both published and unpublished studies were eligible for inclusion, while a quarter restricted inclusion to published studies (80/300 [27%]). However, in 103/300 (34%) SRs, publication status criteria were not reported. Language criteria were reported in 252/300 (84%) SRs, with more SRs considering all languages (129/300 [43%]) than considering English only (92/300 [31%]). Study design inclusion criteria were stated in 237/300 (79%) SRs. Nearly all Cochrane SRs (40/45 [89%]) restricted inclusion to randomized or quasi-randomized controlled trials, whereas only 64/119 (54%) non-Cochrane therapeutic SRs did so. Epidemiology, diagnosis/prognosis, and other SRs included a range of study designs, mostly observational (e.g., cohort, case–control).

Reporting of Search Methods

A median of four electronic bibliographic databases (IQR 3–5) were searched by review authors. Only 28/300 (9%) SRs searched only one database, and nearly all of these were non-Cochrane therapeutic (n = 11) or epidemiology (n = 13) SRs. Years of coverage of the search were completely reported in 196/300 (65%) SRs, and a full Boolean search logic for at least one database was reported in 134/300 (45%). Authors searched a median of one (IQR 1–2) other source, of which reviewing the reference lists of included studies was described most often (243/300 [81%]). Sources of unpublished data were infrequently searched, for example, grey literature databases such as OpenSIGLE (21/300 [7%]) or drug or device regulator databases such as Drugs@FDA (2/164 therapeutic SRs [1%]). Searching of trial registries (e.g., ClinicalTrials.gov) was reported in 58/300 (19%) SRs, more often in Cochrane therapeutic SRs (28/45 [62%]) than in non-Cochrane therapeutic SRs (24/119 [20%]).

Reporting of Screening, Data Extraction, and Risk of Bias Assessment Methods

There was no information in approximately a third of SRs on how authors performed screening (87/300 [29%]), data extraction (97/296 [33%]), or risk of bias assessment (76/206 [37%]) (i.e., number of SR authors performing each task, whether tasks were conducted independently by two authors or by one author with verification by another). Of the SRs that reported this information, very few (<4%) stated that only one author was responsible for these tasks. Risk of bias/quality assessment was formally conducted in 206/296 (70%) SRs, more often in therapeutic SRs (129/160 [81%]) than in epidemiology, diagnosis/prognosis, or other SRs (77/136 [57%]). Many different assessment tools were used. The Cochrane risk of bias tool for randomized trials [11] was the most commonly used tool in both Cochrane (37/42 [88%]) and non-Cochrane therapeutic SRs (36/87 [41%]). Author-developed tools were used in 38/206 (18%) SRs overall and were used more commonly than validated tools in epidemiology SRs (16/44 [36%] used a tool developed by the review authors, while 8/44 [18%] used the Newcastle–Ottawa Scale [24]). Risk of bias information was incorporated into the analysis (e.g., via subgroup or sensitivity analyses) in only 31/189 (16%) SRs with meta-analysis.

Reporting of Included/Excluded Studies and Participants

A review flow was reported in the majority of SRs (226/300 [75%]) and was most often presented in a PRISMA/QUOROM-like flow diagram (206/300 [69%]). Reasons for excluding studies were described in 211/300 (70%) SRs overall but were described less often in epidemiology (42/74 [57%]) and other (13/29 [45%]) SRs. At least one type of grey literature (e.g., conference abstract, thesis) was stated to have been included in 26/300 (9%) SRs. In many SRs, it was difficult to discern the total number of participants across the included studies, as this figure was described in the full text of only 194/296 (66%) SRs and in the abstract of only 147/296 (50%) SRs. Such information was infrequently reported in the SRs classified as other (4/29 [14%] in the full text and 3/29 [10%] in the abstract).

Reporting of Review Outcomes

Authors collected data on a median of four (IQR 2–6) outcomes; however, no outcomes were specified in the methods section of 66/300 (22%) SRs. An outcome was described in the SR as “primary” in less than half of the SRs (136/288 [47%]). Given that we did not seek SR protocols, it is unclear how often the primary outcome was selected a priori. Most primary outcomes were dichotomous (91/136 [67%]). Of the 99 therapeutic SRs with a primary outcome, a p-value or 95% confidence interval was reported for 88/99 (89%) of the primary outcome intervention effect estimates. Of these estimates, 53/88 (60%) were statistically significant and favourable to the intervention, while none were statistically significantly unfavourable to the intervention.

Reporting of Statistical Methods

Meta-analysis was performed in 189/300 (63%) SRs. The random-effects model was used more often than the fixed-effects model (89/189 [47%] versus 34/189 [18%]), and statistical heterogeneity was examined in nearly all of these SRs (175/189 [93%]). In over a third of SRs with meta-analysis (72/189 [38%]), it was stated that a heterogeneity statistic guided the choice of the meta-analysis model (e.g., random-effects model used if I² > 50%). Methods commonly used to infer publication bias (e.g., funnel plot, test for small-study effects) were used in approximately a third of SRs (93/300 [31%]). Of these, only 53/93 (57%) SRs had a sufficient number of studies for the analysis (i.e., a meta-analysis that included at least ten studies [25]). It would have been inappropriate to use such methods in 169/300 (56%) SRs due to the lack of meta-analysis or insufficient number of studies. Authors discussed the possibility of publication bias (regardless of whether it was formally assessed) in 141/300 (47%) SRs. In just under half of the SRs with meta-analysis, authors reported subgroup analyses (87/189 [46%]) or sensitivity analyses (92/189 [49%]). Advanced meta-analytic techniques were rarely used, for example, network meta-analysis (7/300 [2%]) and individual participant data meta-analysis (2/300 [1%]).

Reporting of Limitations, Conclusions, Conflicts of Interest, and Funding

Few SRs included a GRADE assessment of the quality of the body of evidence (32/300 [11%]); nearly all of those that did were Cochrane SRs (27/32 [84%]). Limitations of either the review or included studies (or both) were stated in nearly all SRs (267/300 [89%]), although in 67/300 (22%) no review limitations were considered. Study limitations (including risk of bias/quality) were incorporated into the abstract conclusions of only 99/164 (60%) therapeutic SRs. Most review authors declared whether they had any conflicts of interest (260/300 [87%]). In contrast, only 21/296 (7%) reported the conflicts of interest or funding sources of the studies included in their SR. Almost half of the SRs were funded by a non-profit source (142/300 [47%]), while only 8/300 (3%) were clearly funded by for-profit sources. However, the funding source was not declared in a third of SRs (109/300 [36%]); this non-reporting was more common in non-Cochrane therapeutic (53/119 [45%]), epidemiology (30/74 [41%]), and diagnosis/prognosis SRs (17/33 [51%]) than in Cochrane therapeutic SRs (2/45 [4%]).

Influence of Cochrane Status and Self-Reported Use of PRISMA on Reporting Characteristics

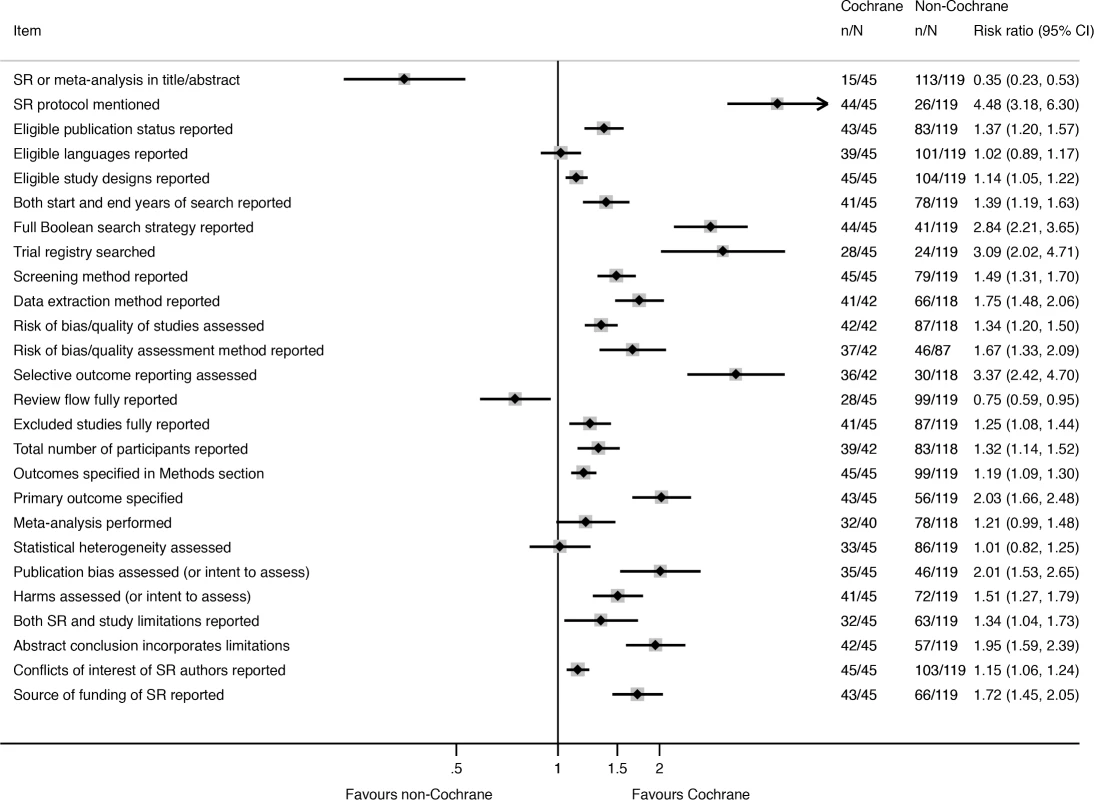

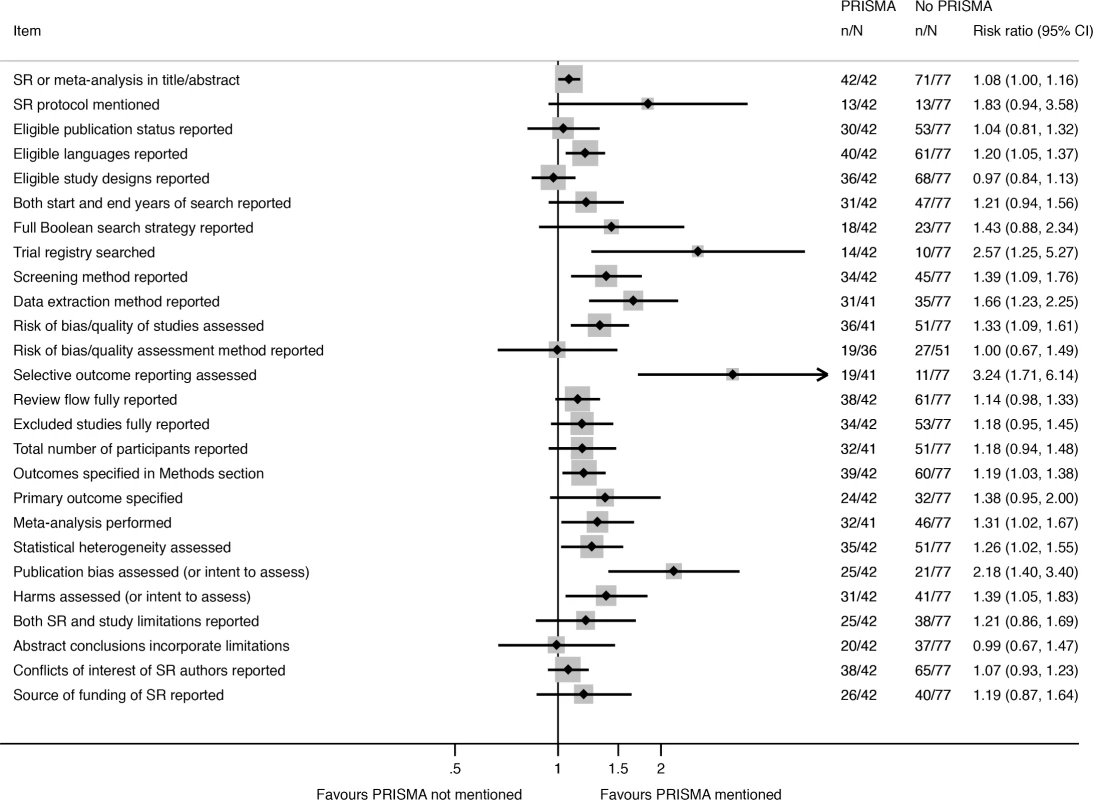

Nearly all of the 26 reporting characteristics of therapeutic SRs that we analyzed according to Cochrane status and self-reported use of the PRISMA Statement were reported more often in SRs that were produced by Cochrane (Fig 2) or that reported that they used the PRISMA Statement (Fig 3). The differences were larger and more often statistically significant in the Cochrane versus non-Cochrane comparison.

Fig. 2. Unadjusted risk ratio associations between reporting characteristics and type of SR: Cochrane versus non-Cochrane therapeutic SRs.

Fig. 3. Unadjusted risk ratio associations between reporting characteristics and self-reported use of PRISMA in non-Cochrane therapeutic SRs.

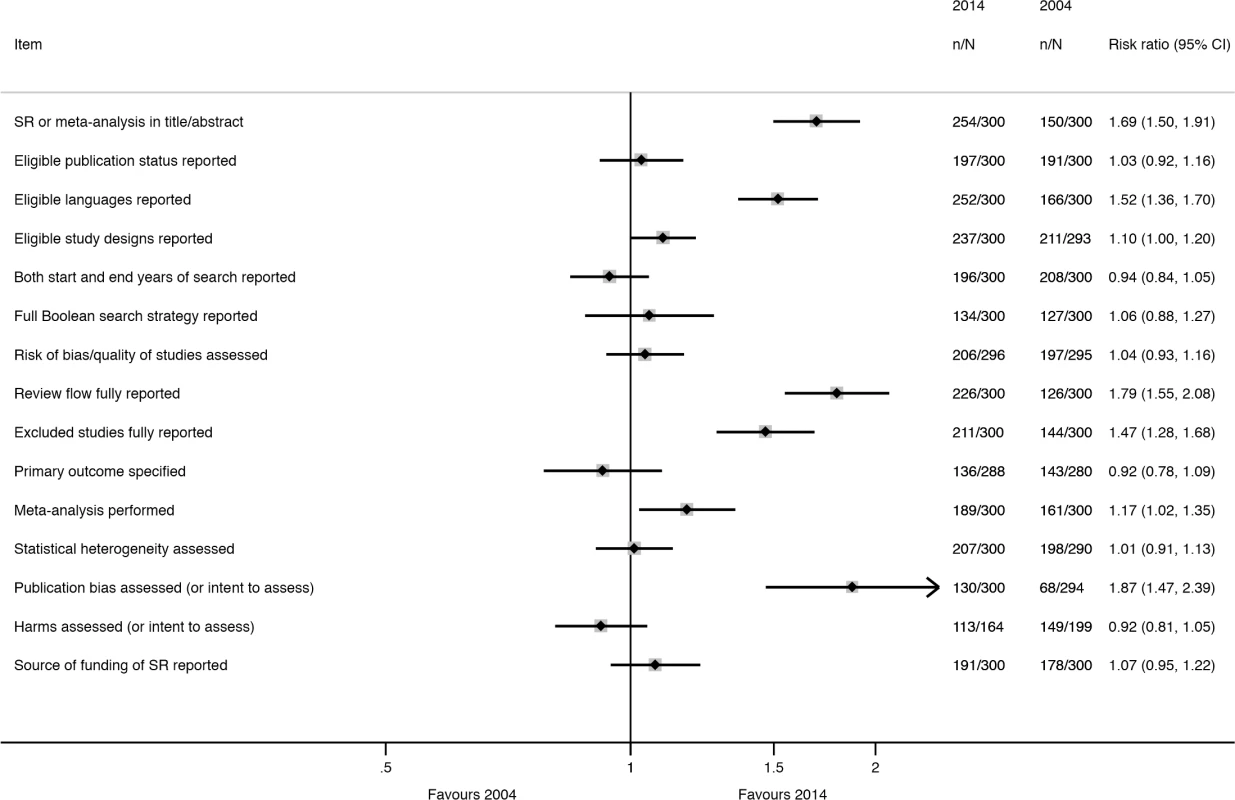

Comparison with 2004 Sample of SRs

The SRs we examined differed in several ways from the November 2004 sample. In 2014, review author teams were larger, and many more SRs were produced by Chinese authors (up from <3% of all SRs in 2004 to 21% in 2014) (Table 4). The proportion of therapeutic SRs decreased (from 71% to 55% of all SRs), coupled with a rise in epidemiological SRs over the decade (from 13% to 25%). Five ICD-10 categories—neoplasms, infections, diseases of the circulatory system, diseases of the digestive system, and mental and behaviour disorders—were the most common conditions in both samples, while two other categories were common in 2014: diseases of the musculoskeletal system and endocrine, nutritional, and metabolic diseases. The proportion of SRs that were Cochrane reviews slightly decreased (from 20% to 15% of all SRs).

Tab. 4. Comparison of the epidemiology of systematic reviews in 2004 and 2014.

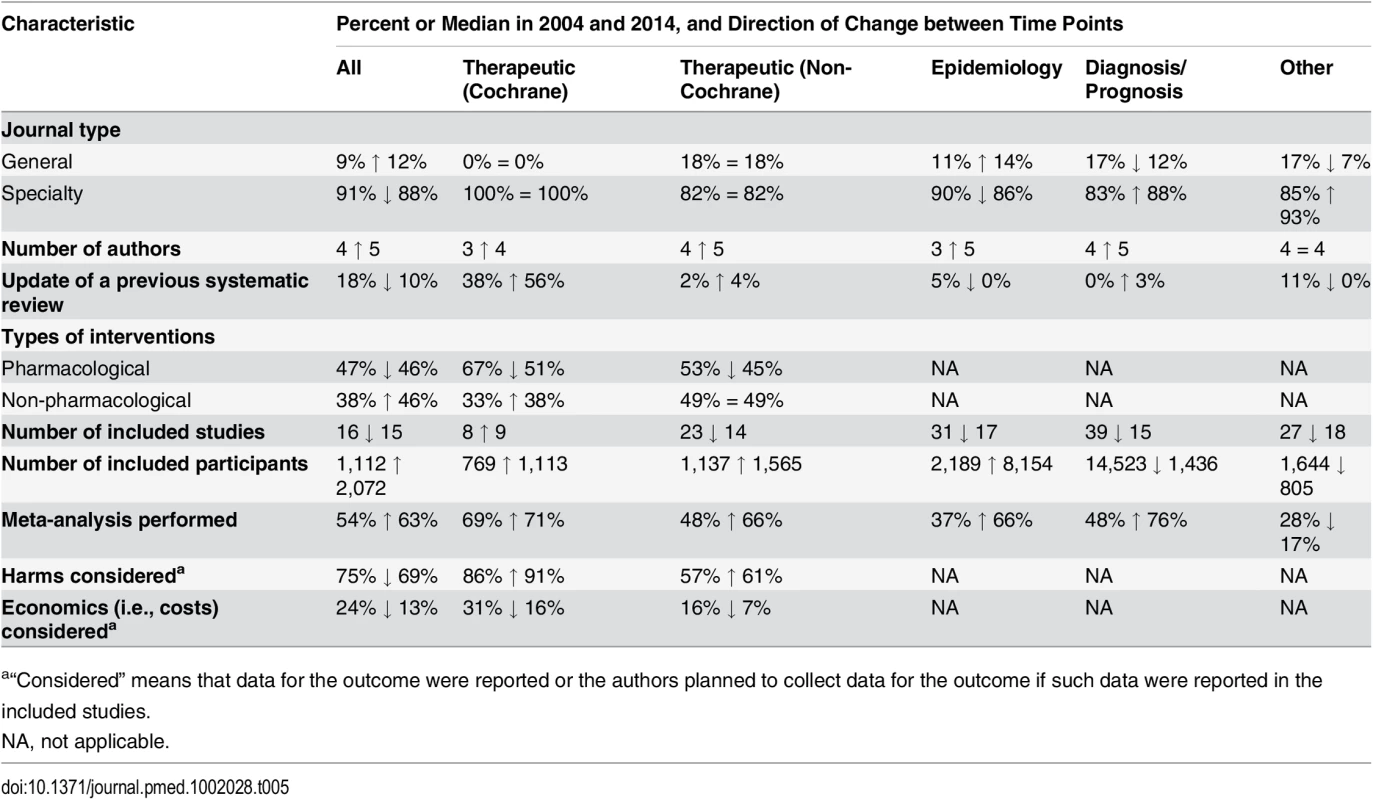

Data given as number (percent).

There were more updates of previous Cochrane therapeutic SRs in the 2014 sample (38% in 2004 versus 56% in 2014) (Table 5). Also, more SRs included meta-analysis (52% in 2004 versus 63% in 2014). In contrast, there was a reduction in the median number of included studies in SRs in 2014, and in the percentage of therapeutic SRs considering harms (75% in 2004 versus 69% in 2014) or cost (24% versus 13%).

Tab. 5. Comparison of the epidemiological characteristics of systematic reviews in 2004 and 2014, subgrouped by focus of SR.

a “Considered” means that data for the outcome were reported or the authors planned to collect data for the outcome if such data were reported in the included studies.

Many characteristics were reported more often in 2014 than in 2004 (Fig 4); however, the extent of improvement varied depending on the item and the type of SR (Table 6). The following were reported much more often regardless of the type of SR: eligible language criteria (55% of all SRs in 2004 versus 84% in 2014), review flow (42% versus 78% of all SRs), and reasons for exclusion of full text articles (48% versus 70% of all SRs). Risk of bias/quality assessment was reported more often in 2014 in non-Cochrane therapeutic (49% in 2004 versus 74% in 2014), epidemiology (30% versus 59%), and diagnosis/prognosis (13% versus 67%) SRs but less so in other SRs (42% in 2004 versus 38% in 2014). A primary outcome was specified more often in 2014 in non-Cochrane therapeutic SRs (37% in 2004 versus 48% in 2014) and epidemiology SRs (25% versus 40%) but much less often in other SRs (24% in 2004 versus 3% in 2014). Despite the large improvements for some SR types, many of the 2014 percentages are less than ideal. Further, there was little change or a slight worsening in the reporting of several features, including the mention of a SR protocol in non-Cochrane SRs (14% in 2004 versus 13% in 2014), presentation of a full Boolean search strategy for at least one database (42% versus 45% of all SRs), and reporting of funding source of the SR (59% versus 64% of all SRs).

Fig. 4. Unadjusted risk ratio associations between reporting characteristics and year: 2004 versus 2014.

Tab. 6. Comparison of the reporting characteristics of systematic reviews in 2004 and 2014.

Discussion

We estimate that more than 8,000 SRs are being indexed in MEDLINE annually, corresponding to a 3-fold increase over the last decade. The majority of SRs indexed in February 2014 addressed a therapeutic question and were conducted by authors based in China, the UK, or the US; they included a median of 15 studies involving 2,072 participants. Meta-analysis was performed in 63% of SRs, mostly using standard pairwise methods. Study risk of bias/quality assessment was performed in 70% of SRs, but rarely incorporated into the analysis (16%). Few SRs (7%) searched sources of unpublished data, and the risk of publication bias was considered in less than half of SRs. Reporting quality was highly variable; at least a third of SRs did not mention using a SR protocol or did not report eligibility criteria relating to publication status, years of coverage of the search, a full Boolean search logic for at least one database, methods for data extraction, methods for study risk of bias assessment, a primary outcome, an abstract conclusion that incorporated study limitations, or the funding source of the SR. Cochrane SRs, which accounted for 15% of the sample, had more complete reporting than all other types of SRs. Reporting has generally improved since 2004, but remains suboptimal for many characteristics.

Explanation of Results and Implications

The increase in SR production from 2004 to 2014 may be explained by several positive changes over the decade. The scientific community and health care practitioners may have increasingly recognized that the deluge of published research over the decade requires integration, and that a synthesis of the literature is more reliable than relying on the results of single studies. Some funding agencies (e.g., the UK National Institute for Health Research and the Canadian Institutes of Health Research) now require applicants to justify their applications for research funding with reference to a SR, which the applicants themselves must perform if one does not exist [26]. Further, some countries, particularly China, have developed a research culture that places a strong emphasis on the production of SRs [27]. Also, the development of free software to perform meta-analyses (e.g., RevMan [28], MetaXL [29], R [30,31]) has likely contributed to its increased use.

There are also some unsavory reasons for the proliferation of SRs. In recent years, some countries have initiated financial incentives to increase publication rates (e.g., more funding for institutions that publish more articles or cash bonuses to individuals per article published) [32]. Further, appointment and promotion committees often place great emphasis on the number of publications an investigator has, rather than on the rigor, transparency, and reproducibility of the research [4]. Coupled with the growing recognition of the value of SRs, investigators may be strongly motivated to publish a large number of SRs, regardless of whether they have the necessary skills to perform them well. In addition, the proliferation of new journals over the decade has made it more likely that authors can successfully submit a SR for publication regardless of whether one on the same topic has been published elsewhere. This has resulted in a large number of overlapping SRs (one estimate suggests 67% of meta-analyses have at least one overlapping meta-analysis within a 3-y period) [33]. Such overlap of SR questions is not possible in the Cochrane Database of Systematic Reviews, which may explain why the proportion of Cochrane SRs within the broader SR landscape has diminished.

The conduct of SRs was good in some respects, but not others. Examples of good conduct are that nearly all SRs searched more than one bibliographic database, and the majority performed dual-author screening, data extraction, and risk of bias assessment. However, few SRs searched sources of unpublished data (e.g., trial registries, regulatory databases), despite their ability to reduce the impact of reporting biases [34,35]. Also, an appreciable proportion of SRs (particularly epidemiology and diagnosis/prognosis SRs) did not assess the risk of bias/quality of the included studies. In addition, the choice of meta-analysis model in many SRs was guided by heterogeneity statistics (e.g., I²), a practice strongly discouraged by leading SR organizations because of the low reliability of these statistics [19,20]. It is therefore possible that some systematic reviewers inappropriately generated summary estimates, by ignoring clinical heterogeneity when statistical heterogeneity was perceived to be low. Further, a suboptimal number of therapeutic SRs considered the harms of interventions. It is possible that review authors did not comment on harms when none were identified in the included studies. However, reporting of both zero and non-zero harm events is necessary so that patients and clinicians can determine the risk–benefit profile of an intervention [36]. To reduce the avoidable waste associated with these examples of poor conduct of SRs, strategies such as formal training of biomedical researchers in research design and analysis and the involvement of statisticians and methodologists in SRs are warranted [4].
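For readers unfamiliar with the heterogeneity statistic mentioned above, the sketch below shows how I² is derived from Cochran's Q and how fixed-effect and random-effects (DerSimonian-Laird) pooled estimates are obtained from the same data. The effect sizes and variances are hypothetical values chosen for illustration, and the function name is an assumption; none of these numbers come from the SRs examined in this study.

```python
def pool(effects, variances):
    """Inverse-variance fixed-effect and DerSimonian-Laird random-effects pooling."""
    weights = [1 / v for v in variances]
    sum_w = sum(weights)
    fixed = sum(w * y for w, y in zip(weights, effects)) / sum_w

    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1

    # I^2: share of total variation attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0

    # DerSimonian-Laird estimate of the between-study variance tau^2.
    c = sum_w - sum(w ** 2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c)

    re_weights = [1 / (v + tau2) for v in variances]
    random = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    return {"fixed": fixed, "random": random, "q": q, "i2": i2, "tau2": tau2}


# HYPOTHETICAL data: log odds ratios and their variances for four studies.
effects = [-0.60, -0.10, 0.25, -0.45]
variances = [0.09, 0.12, 0.10, 0.15]

result = pool(effects, variances)
print(f"Q = {result['q']:.2f}, I^2 = {result['i2']:.0f}%")
print(f"fixed-effect estimate   = {result['fixed']:.3f}")
print(f"random-effects estimate = {result['random']:.3f}")
```

With only a handful of studies, Q (and therefore I²) rests on very few degrees of freedom, which is one way to see why a threshold on I² may be a fragile basis for choosing between the two models.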

Cochrane SRs continue to differ from their non-Cochrane counterparts. Completeness of reporting is superior in Cochrane SRs, possibly due to the use of strategies in the editorial process that promote good reporting (such as use of the Methodological Expectations of Cochrane Intervention Reviews [MECIR] standards [37]). Also, word limits or unavailability of online appendices in some non-Cochrane journals may lead to less detailed reporting. Cochrane SRs tend to include fewer studies, which may be partly due to the reviews more often restricting inclusion to randomized trials only. However, fewer studies being included could also result from having a narrower review question (in terms of the patients, interventions, and outcomes that are addressed). Further research should explore the extent to which Cochrane and non-Cochrane SRs differ in scope, and hence applicability to clinical practice.

It is notable that reporting of only a few characteristics improved substantially over the decade. For example, the SRs in the 2014 sample were much more likely to present a review flow and reasons for excluded studies than SRs in the 2004 sample. This was most often done using a PRISMA flow diagram [7], suggesting that this component of the PRISMA Statement has been successfully adopted by the SR community. However, 2014 SRs were slightly less likely than their 2004 counterparts to identify an outcome as “primary” and to report both the start and end years of the search, and the number of SRs reporting the source of funding increased only marginally. We do not believe that the smaller changes in reporting of some characteristics are due to their receiving less emphasis in the original paper by Moher et al. [6] or the PRISMA Statement [7], because neither emphasized any characteristic over others. Therefore, more research is needed to determine which characteristics authors think are less important to report in a SR, and why.

Mention of the PRISMA Statement [7], perhaps a surrogate for actual use, appears to be associated with more complete reporting. However, reporting of many SRs remains poor despite the availability of the PRISMA Statement since 2009. There are several possible reasons for this. Some authors may still be unaware of PRISMA or assume that they already know how to report a SR completely. The extent to which journals endorse PRISMA is highly variable, with some explicitly requiring authors to submit a completed checklist at the time of manuscript submission, others only recommending its use in the instructions to authors, and many not referring to it at all [38,39]. Also, some PRISMA items include multiple elements (e.g., item 7 asks authors to describe the databases searched, whether authors were contacted to identify additional trials, the years of coverage of the databases searched, and the date of the last search). Some authors may assume that they have adequately addressed an item if they report at least one element. Also, authors may consider PRISMA only after spending hours drafting and refining their manuscript with co-authors, a point when they may be less likely to make the required changes [40].

Our findings suggest that strategies other than the passive dissemination of reporting guidelines are needed to address the poor reporting of SRs. One strategy is to develop software that facilitates the completeness of SR reporting [41]. For example, Barnes and colleagues recently developed an online writing tool based on the CONSORT Statement [40]. The tool is meant to be used by authors when writing the first draft of a randomized trial report and consists of bullet points detailing all the key elements of the corresponding CONSORT item(s) to be reported, with examples of good practice. Medical students randomly assigned to use the tool over a 4-h period reported trial methods more completely [40]; thus, a similar tool based on the PRISMA Statement is worth exploring. Also, journal editors could receive certified training in how to endorse and implement PRISMA and facilitate its use by peer reviewers [42]. Further, collaboration between key stakeholders (funders, journals, academic institutions) is needed to address poor reporting [26].

More research is needed on the risk of bias in SRs that is associated with particular methods. We observed that a considerable proportion of therapeutic SRs (40%) had potentially misleading conclusions because the limitations of the evidence on which the conclusions were based were not taken into consideration. This is a problem because some users of SRs only have access to the abstract and may be influenced by the misleading conclusions to implement interventions that are either ineffective or harmful [9]. We have not explored in this study the extent to which the results of the SRs we examined were biased. Such bias can occur for several reasons, including use of inappropriate eligibility criteria, failure to use methods that minimize error in data collection, selective inclusion of the most favourable results from study reports, inability to access unpublished studies, and inappropriate synthesis of clinically heterogeneous studies [43]. Determining how often the results of SRs are biased is important because major users of SRs such as clinical practice guideline developers tend to rely on the results (e.g., intervention effect estimates) rather than conclusions when formulating recommendations [44]. We only recorded whether methodological characteristics were reported or not, rather than evaluating how optimal each method was. Further, exploring whether non-reporting of a method is associated with biased results is problematic, because non-compliance with reporting guidelines is not necessarily an indicator of a SR’s methodological quality. That is, some review authors may use optimal methods but fail to clearly specify those methods for non-bias-related reasons (e.g., word limits). In future, investigators could apply to the SRs in our sample a tool such as the ROBIS tool [45], which guides appraisers to make judgements about the risk of bias at the SR level (rather than the study level) due to several aspects of SR conduct and reporting.

Strengths and Limitations

There are several strengths of our methods. We used a validated search filter to identify SRs, and screened each full text article twice to confirm that it met the eligibility criteria. Screening each article provides a more reliable estimate of SR prevalence than relying on the search filters for SRs, which we found retrieved many non-systematic reviews and other knowledge syntheses. We did not restrict inclusion based on the focus of the SR and, thus, unlike previous studies [14,15], were able to collect data on a broader cross-section of SRs.

There are also some limitations to our study. Our results reflect what was reported in the articles, and it is possible that some SRs were conducted more rigorously than was specified in the report, and vice versa. Our findings may not generalize to SRs indexed outside of MEDLINE or published in a language other than English. Two authors independently and in duplicate extracted data on only a 10% random sample of SRs. We attempted to minimize data extraction errors by independently verifying data for 42/88 “problematic items” (i.e., those where there was at least one discrepancy between two authors in the 10% random sample). We cannot exclude the possibility of errors in the non-verified data items, although we consider the risk to be low given that the error rate for these items was 0% in the random sample. Also, our results concerning some types of SRs (e.g., diagnosis/prognosis, other) were based on small samples, so should be interpreted with caution. Further, searching for articles indexed in MEDLINE, rather than published, during the specified time frame means that we examined a small number of SRs (8/300 [3%]) with more than a year’s delay in indexing after publication. However, inclusion of these few articles is unlikely to have affected our findings.

Some terminology contained within the PRISMA-P definition of a SR may be interpreted differently by different readers (e.g., “systematic search” and “explicit, reproducible methodology”). Hence, it is possible that others applying the PRISMA-P definition may have reached a slightly different estimate of SR prevalence than we did. We tried to address this by also reporting a SR prevalence that included articles consistent with the less explicit definition used by Moher et al. [6]. Also, any observed improvements in reporting since 2004 may partly be attributed to our use of a more stringent definition of SRs in 2014, which required articles to meet more minimum reporting requirements. Hence, we may have slightly overestimated the improvements in reporting from 2004 to 2014 and underestimated the true scale of poor reporting in SRs.

Conclusion

An increasing number of SRs are being published, and many are poorly conducted and reported. This is wasteful for several reasons. Poor conduct can lead to SRs with misleading results, while poor reporting prevents users from being able to determine the validity of the methods used. Strategies are needed to increase the value of SRs to patients, health care practitioners, and policy makers.

References

1. US National Library of Medicine. Key MEDLINE indicators. 2015 [cited 1 Sep 2015]. Available: http://www.nlm.nih.gov/bsd/bsd_key.html.

2. Murad M, Montori VM. Synthesizing evidence: shifting the focus from individual studies to the body of evidence. JAMA. 2013;309:2217–2218. doi: 10.1001/jama.2013.5616 PMID: 23736731

3. Murad MH, Montori VM, Ioannidis JP, Jaeschke R, Devereaux PJ, Prasad K, et al. How to read a systematic review and meta-analysis and apply the results to patient care: users’ guides to the medical literature. JAMA. 2014;312:171–179. doi: 10.1001/jama.2014.5559 PMID: 25005654

4. Ioannidis JPA, Greenland S, Hlatky MA, Khoury MJ, Macleod MR, Moher D, et al. Increasing value and reducing waste in research design, conduct, and analysis. Lancet. 2014;383:166–175. doi: 10.1016/S0140-6736(13)62227-8 PMID: 24411645

5. Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, et al. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383:267–276. doi: 10.1016/S0140-6736(13)62228-X PMID: 24411647

6. Moher D, Tetzlaff J, Tricco AC, Sampson M, Altman DG. Epidemiology and reporting characteristics of systematic reviews. PLoS Med. 2007;4:e78. PMID: 17388659

7. Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6:e1000097. doi: 10.1371/journal.pmed.1000097 PMID: 19621072

8. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700. doi: 10.1136/bmj.b2700 PMID: 19622552

9. Beller EM, Glasziou PP, Altman DG, Hopewell S, Bastian H, Chalmers I, et al. PRISMA for abstracts: reporting systematic reviews in journal and conference abstracts. PLoS Med. 2013;10:e1001419. doi: 10.1371/journal.pmed.1001419 PMID: 23585737

10. Institute of Medicine. Finding what works in health care: standards for systematic reviews. Washington (District of Columbia): National Academies Press; 2011.

11. Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928. doi: 10.1136/bmj.d5928 PMID: 22008217

12. Moher D, Stewart L, Shekelle P. Establishing a new journal for systematic review products. Syst Rev. 2012;1:1. doi: 10.1186/2046-4053-1-1 PMID: 22587946

13. Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010;7:e1000326. doi: 10.1371/journal.pmed.1000326 PMID: 20877712

14. Gianola S, Gasparini M, Agostini M, Castellini G, Corbetta D, Gozzer P, et al. Survey of the reporting characteristics of systematic reviews in rehabilitation. Phys Ther. 2013;93:1456–1466. doi: 10.2522/ptj.20120382 PMID: 23744458

15. Turner L, Galipeau J, Garritty C, Manheimer E, Wieland LS, Yazdi F, et al. An evaluation of epidemiological and reporting characteristics of complementary and alternative medicine (CAM) systematic reviews (SRs). PLoS ONE. 2013;8:e53536. doi: 10.1371/journal.pone.0053536 PMID: 23341949

16. Tunis AS, McInnes MD, Hanna R, Esmail K. Association of study quality with completeness of reporting: have completeness of reporting and quality of systematic reviews and meta-analyses in major radiology journals changed since publication of the PRISMA statement? Radiology. 2013;269:413–426. doi: 10.1148/radiol.13130273 PMID: 23824992

17. Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015;4:1. doi: 10.1186/2046-4053-4-1 PMID: 25554246

18. Shamseer L, Moher D, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. BMJ. 2015;349:g7647. doi: 10.1136/bmj.g7647 PMID: 25555855

19. Higgins JPT, Green S, editors. Cochrane handbook for systematic reviews of interventions, version 5.1.0. 2011 Mar [cited 21 Apr 2016]. The Cochrane Collaboration. Available: http://handbook.cochrane.org/.

20. Agency for Healthcare Research and Quality. Methods guide for effectiveness and comparative effectiveness reviews. AHRQ Publication No. 10(14)-EHC063-EF. 2014 Jan [cited 21 Apr 2016]. Available: https://www.effectivehealthcare.ahrq.gov/ehc/products/60/318/CER-Methods-Guide-140109.pdf.

21. StataCorp. Stata statistical software, release 13. College Station (Texas): StataCorp; 2013.

22. Bland JM, Altman DG. Statistics notes. The odds ratio. BMJ. 2000;320:1468. PMID: 10827061

23. Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. Meta-analysis Of Observational Studies in Epidemiology (MOOSE) group. JAMA. 2000;283:2008–2012. PMID: 10789670

24. Wells G, Shea B, O’Connell D, Peterson J, Welch V, Losos M, et al. The Newcastle–Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. 2014 [cited 24 Sep 2015]. Available: http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp.

25. Sterne JA, Sutton AJ, Ioannidis JP, Terrin N, Jones DR, Lau J, et al. Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ. 2011;343:d4002. doi: 10.1136/bmj.d4002 PMID: 21784880

26. Moher D, Glasziou P, Chalmers I, Nasser M, Bossuyt PM, Korevaar DA, et al. Increasing value and reducing waste in biomedical research: who’s listening? Lancet. 2015 Sep 25. doi: 10.1016/S0140-6736(15)00307-4

27. Ioannidis JP, Chang CQ, Lam TK, Schully SD, Khoury MJ. The geometric increase in meta-analyses from China in the genomic era. PLoS ONE. 2013;8:e65602. doi: 10.1371/journal.pone.0065602 PMID: 23776510

28. Nordic Cochrane Centre. Review Manager (RevMan), version 5.1. Copenhagen: Nordic Cochrane Centre; 2011.

29. Barendregt JJ, Doi SA. MetaXL, version 1.4. Wilston (Australia): EpiGear International; 2013.

30. R Development Core Team. R: a language and environment for statistical computing. Vienna: R Foundation for Statistical Computing; 2012.

31. Dewey M. CRAN task view: meta-analysis. 2015 Dec 18 [cited 3 Feb 2016]. Available: http://CRAN.R-project.org/view=MetaAnalysis.

32. Franzoni C, Scellato G, Stephan P. Science policy. Changing incentives to publish. Science. 2011;333:702–703. doi: 10.1126/science.1197286 PMID: 21817035

33. Siontis KC, Hernandez-Boussard T, Ioannidis JP. Overlapping meta-analyses on the same topic: survey of published studies. BMJ. 2013;347:f4501. doi: 10.1136/bmj.f4501 PMID: 23873947

34. Jones CW, Keil LG, Weaver MA, Platts-Mills TF. Clinical trials registries are under-utilized in the conduct of systematic reviews: a cross-sectional analysis. Syst Rev. 2014;3:126. doi: 10.1186/2046-4053-3-126 PMID: 25348628

35. Hart B, Lundh A, Bero L. Effect of reporting bias on meta-analyses of drug trials: reanalysis of meta-analyses. BMJ. 2012;344:d7202. doi: 10.1136/bmj.d7202 PMID: 22214754

36. Saini P, Loke YK, Gamble C, Altman DG, Williamson PR, Kirkham JJ. Selective reporting bias of harm outcomes within studies: findings from a cohort of systematic reviews. BMJ. 2014;349:g6501. doi: 10.1136/bmj.g6501 PMID: 25416499

37. Chandler J, Churchill R, Higgins J, Lasserson T, Tovey D. Methodological standards for the reporting of new Cochrane intervention reviews, version 1.1. 2012 Dec 17 [cited 21 Apr 2016]. Available: http://editorial-unit.cochrane.org/sites/editorial-unit.cochrane.org/files/uploads/MECIR%20Reporting%20standards%201.1_17122012_2.pdf.

38. Stevens A, Shamseer L, Weinstein E, Yazdi F, Turner L, Thielman J, et al. Relation of completeness of reporting of health research to journals’ endorsement of reporting guidelines: systematic review. BMJ. 2014;348:g3804. doi: 10.1136/bmj.g3804 PMID: 24965222

39. Smith TA, Kulatilake P, Brown LJ, Wigley J, Hameed W, Shantikumar S. Do surgery journals insist on reporting by CONSORT and PRISMA? A follow-up survey of ‘instructions to authors’. Ann Med Surg (Lond). 2015;4:17–21.

40. Barnes C, Boutron I, Giraudeau B, Porcher R, Altman DG, Ravaud P. Impact of an online writing aid tool for writing a randomized trial report: the COBWEB (Consort-based WEB tool) randomized controlled trial. BMC Med. 2015;13:221. doi: 10.1186/s12916-015-0460-y PMID: 26370288

41. PLoS Medicine Editors. From checklists to tools: lowering the barrier to better research reporting. PLoS Med. 2015;12:e1001910. doi: 10.1371/journal.pmed.1001910 PMID: 26600090

42. Moher D, Altman DG. Four proposals to help improve the medical research literature. PLoS Med. 2015;12:e1001864. doi: 10.1371/journal.pmed.1001864 PMID: 26393914

43. Tricco AC, Tetzlaff J, Sampson M, Fergusson D, Cogo E, Horsley T, et al. Few systematic reviews exist documenting the extent of bias: a systematic review. J Clin Epidemiol. 2008;61:422–434. doi: 10.1016/j.jclinepi.2007.10.017 PMID: 18394534

44. Schunemann HJ, Wiercioch W, Etxeandia I, Falavigna M, Santesso N, Mustafa R, et al. Guidelines 2.0: systematic development of a comprehensive checklist for a successful guideline enterprise. CMAJ. 2014;186:E123–E142. doi: 10.1503/cmaj.131237 PMID: 24344144

45. Whiting P, Savovic J, Higgins JP, Caldwell DM, Reeves BC, Shea B, et al. ROBIS: a new tool to assess risk of bias in systematic reviews was developed. J Clin Epidemiol. 2016;69:225–234. doi: 10.1016/j.jclinepi.2015.06.005 PMID: 26092286

This article was published in the journal PLOS Medicine, 2016, Issue 5.
